They are:

1) Pagination of the data and use of JRDataSource

2) Virtualization of the report.

When there is a huge dataset, it is not a good idea to retrieve all the data at once. The application will hog memory and can run out of memory before the data even reaches the JasperReports engine for filling. To avoid that, the service/DB layer should return the data in pages. You fetch the data in chunks and hand the records to the engine through the JRDataSource interface; when the records in the current chunk are used up, you fetch the next chunk, until all the chunks are consumed. By JRDataSource I do not mean the collection-based data sources (such as JRBeanCollectionDataSource): implement the JRDataSource interface yourself and provide the data through next() and getFieldValue().

To provide an example, I took the "virtualizer" sample from the JasperReports samples and modified it a bit for this article. To see how to implement JRDataSource, have a look at the inner class "InnerDS" in the example.
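Here is a minimal sketch of such a paged data source, assuming the data layer can be queried by offset and page size and returns each row as a Map keyed by report field name. `PagedDataSource` and `PageFetcher` are hypothetical names for illustration; this is not the "InnerDS" class from the sample. Only JRDataSource, JRField and JRException come from JasperReports, which must be on the classpath.

```java
import java.util.Collections;
import java.util.Iterator;
import java.util.List;
import java.util.Map;

import net.sf.jasperreports.engine.JRDataSource;
import net.sf.jasperreports.engine.JRException;
import net.sf.jasperreports.engine.JRField;

/** Feeds the report one page of rows at a time, so only a single chunk is on the heap. */
public class PagedDataSource implements JRDataSource {

    /** Hypothetical hook into the service/DB layer: returns at most pageSize rows starting at offset. */
    public interface PageFetcher {
        List<Map<String, Object>> fetch(int offset, int pageSize);
    }

    private final PageFetcher fetcher;
    private final int pageSize;
    private int offset = 0;                 // offset of the next chunk to request
    private boolean lastPageSeen = false;   // set once a short (or empty) chunk comes back
    private Iterator<Map<String, Object>> page = Collections.emptyIterator();
    private Map<String, Object> current;    // the row the engine is currently reading

    public PagedDataSource(PageFetcher fetcher, int pageSize) {
        if (pageSize <= 0) {
            throw new IllegalArgumentException("pageSize must be positive");
        }
        this.fetcher = fetcher;
        this.pageSize = pageSize;
    }

    @Override
    public boolean next() throws JRException {
        // When the current chunk is used up, ask the data layer for the next one.
        while (!page.hasNext()) {
            if (lastPageSeen) {
                current = null;
                return false;               // no more chunks: the report is complete
            }
            List<Map<String, Object>> rows = fetcher.fetch(offset, pageSize);
            offset += rows.size();
            lastPageSeen = rows.size() < pageSize;
            page = rows.iterator();         // the previous chunk can now be garbage collected
        }
        current = page.next();
        return true;
    }

    @Override
    public Object getFieldValue(JRField field) throws JRException {
        return valueOf(field.getName());
    }

    /** Looks up a column of the current row by report field name. */
    Object valueOf(String name) {
        return current == null ? null : current.get(name);
    }
}
```

Treating a short or empty chunk as the end of the data means the data layer only needs an offset/limit query; no separate count query is required.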

Even after the data is returned in chunks, the report still has to end up as a single document, and the Jasper engine builds a JasperPrint object for it. To keep memory from piling up at this stage, JasperReports provides a really cool feature called the virtualizer. A virtualizer basically serializes the filled pages and writes them to the file system (or, in one case, compresses them) to avoid the out-of-memory condition. There are three virtualizers available as of now: JRFileVirtualizer, JRSwapFileVirtualizer and JRGzipVirtualizer.

JRFileVirtualizer is a really simple virtualizer: you specify the number of pages to keep in memory and the directory into which the Jasper engine can swap the excess pages. The disadvantage of this virtualizer is the file-handling overhead. It creates many files during virtualization and finally produces the report from those files. If the dataset is not that large, you can go for JRFileVirtualizer.

The second virtualizer, JRSwapFileVirtualizer, overcomes this disadvantage. It creates only one swap file, which grows as needed. You create a JRSwapFile by specifying the swap directory, the block size, and the minimum number of blocks by which the file grows when it is full. Then, when creating the JRSwapFileVirtualizer, pass the JRSwapFile as a parameter along with the number of pages to keep in memory. This virtualizer is the best fit for huge datasets.

The third, JRGzipVirtualizer, is a special virtualizer that does not write the data to files at all; instead, it compresses the JasperPrint pages with the gzip algorithm, which reduces heap consumption. The JasperReports Ultimate Guide says that JRGzipVirtualizer can reduce memory consumption by a factor of about ten. If you are sure your dataset is not that big and you want to avoid file I/O, you can go for JRGzipVirtualizer.
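The sketch below shows how the three virtualizers might be created and handed to the filler, assuming a JasperReports version that has these constructors. The class name `VirtualizedReport`, the kind strings and the swap-file sizing (1024-byte blocks, growing by at least 100 blocks) are illustrative assumptions, not values taken from the sample. The virtualizer is passed through the `JRParameter.REPORT_VIRTUALIZER` parameter, and it is cleaned up only after the export, because the exporter reads the swapped pages back.

```java
import java.util.HashMap;
import java.util.Map;

import net.sf.jasperreports.engine.JRDataSource;
import net.sf.jasperreports.engine.JRException;
import net.sf.jasperreports.engine.JRParameter;
import net.sf.jasperreports.engine.JRVirtualizer;
import net.sf.jasperreports.engine.JasperExportManager;
import net.sf.jasperreports.engine.JasperFillManager;
import net.sf.jasperreports.engine.JasperPrint;
import net.sf.jasperreports.engine.fill.JRFileVirtualizer;
import net.sf.jasperreports.engine.fill.JRGzipVirtualizer;
import net.sf.jasperreports.engine.fill.JRSwapFileVirtualizer;
import net.sf.jasperreports.engine.util.JRSwapFile;

/** Hypothetical helper: builds a virtualizer and uses it to fill and export a report. */
public class VirtualizedReport {

    /** pagesInMemory is the number of filled pages allowed to stay on the heap. */
    public static JRVirtualizer createVirtualizer(String kind, String swapDir, int pagesInMemory) {
        switch (kind) {
            case "file":
                // Writes each swapped-out page to its own file in swapDir.
                return new JRFileVirtualizer(pagesInMemory, swapDir);
            case "swap":
                // One swap file in swapDir; block size and growth are example values only.
                JRSwapFile swapFile = new JRSwapFile(swapDir, 1024, 100);
                // swapOwner = true: the virtualizer disposes of the swap file in cleanup().
                return new JRSwapFileVirtualizer(pagesInMemory, swapFile, true);
            case "gzip":
                // No files: pages beyond the limit are kept gzip-compressed in memory.
                return new JRGzipVirtualizer(pagesInMemory);
            default:
                throw new IllegalArgumentException("unknown virtualizer kind: " + kind);
        }
    }

    /** Fills a compiled .jasper report using the virtualizer, then exports it to PDF. */
    public static void fillToPdf(String jasperFile, JRDataSource dataSource,
                                 JRVirtualizer virtualizer, String pdfFile) throws JRException {
        Map<String, Object> params = new HashMap<>();
        params.put(JRParameter.REPORT_VIRTUALIZER, virtualizer);
        try {
            long start = System.currentTimeMillis();
            JasperPrint print = JasperFillManager.fillReport(jasperFile, params, dataSource);
            System.out.println("Filling time : " + (System.currentTimeMillis() - start));
            JasperExportManager.exportReportToPdfFile(print, pdfFile);
        } finally {
            // Safe only now: the export above has already read the virtualized pages.
            virtualizer.cleanup();
        }
    }
}
```

Keeping the choice of virtualizer behind one method makes it easy to rerun the same report with each virtualizer and compare timings.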

Check the sample to learn more about the coding part. To keep it simple, I reused the "virtualizer" sample and added a JRDataSource implementation with paging. I ran the attached sample for four scenarios. To tighten the limits and see the real effects, I ran the application with a 10 MB maximum heap size (-Xmx10M).

1a) No Virtualizer, which ran out of memory with the 10 MB max heap size limit.

export:

[java] Exception in thread "main" java.lang.OutOfMemoryError: Java heap space

[java] Java Result: 1

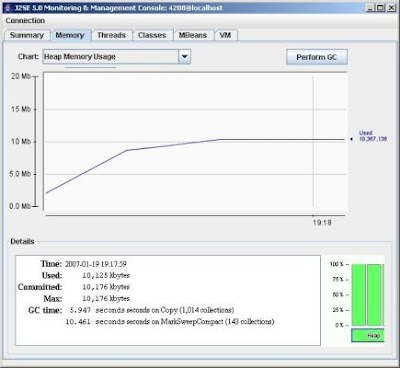

1b) No Virtualizer, with the default max heap size (64 MB)

export2:

[java] null

[java] Filling time : 44547

[java] PDF creation time : 22109

[java] XML creation time : 10157

[java] HTML creation time : 12281

[java] CSV creation time : 2078

2) With JRFileVirtualizer

exportFV:

[java] Filling time : 161170

[java] PDF creation time : 38355

[java] XML creation time : 14483

[java] HTML creation time : 17935

[java] CSV creation time : 5812

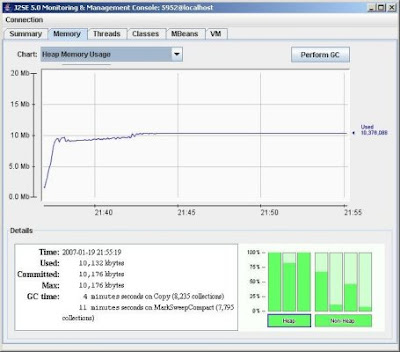

3) With JRSwapFileVirtualizer

exportSFV:

[java] Filling time : 51879

[java] PDF creation time : 32501

[java] XML creation time : 14405

[java] HTML creation time : 16579

[java] CSV creation time : 5365

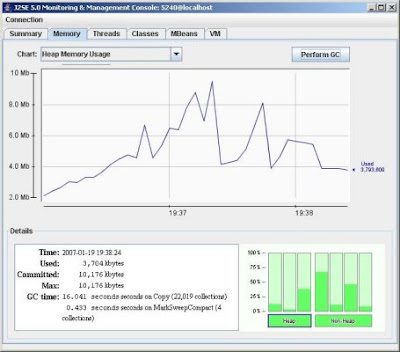

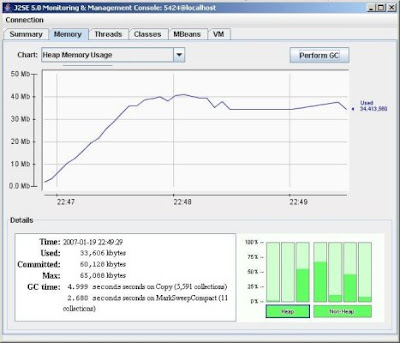

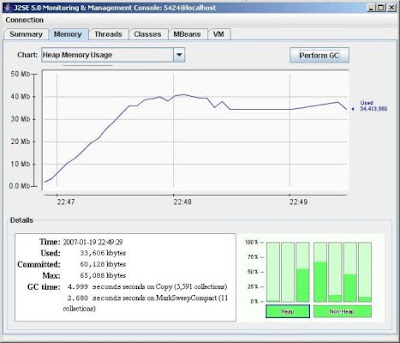

4a) With JRGzipVirtualizer, with lots of GC

exportGZV:

[java] Filling time : 84062

[java] Exception in thread "RMI TCP Connection(22)-127.0.0.1" java.lang.OutOfMemoryError: Java heap space

[java] Exception in thread "RMI TCP Connection(24)-127.0.0.1" java.lang.OutOfMemoryError: Java heap space

[java] Exception in thread "main" java.lang.OutOfMemoryError: Java heap space

[java] Exception in thread "RMI TCP Connection(25)-127.0.0.1" java.lang.OutOfMemoryError: Java heap space

[java] Exception in thread "RMI TCP Connection(27)-127.0.0.1" java.lang.OutOfMemoryError: Java heap space

[java] Java Result: 1

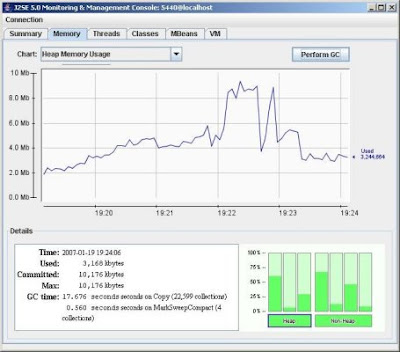

4b) With JRGzipVirtualizer (max heap size: 13 MB)

exportGZV2:

[java] Filling time : 59297

[java] PDF creation time : 35594

[java] XML creation time : 16969

[java] HTML creation time : 19468

[java] CSV creation time : 10313

I have shared the updated virtualizer sample files at Updated Virtualizer Sample files
